To create the template for this, I had to make a number of untested assumptions about how artists draw the figure. Most come from personal experience. They won't hold in all cases, but a patient artist can adapt to them.

In future versions, I would like to drop most of these assumptions, specifically those relating to drawing order, erasure, and multiple strokes.

I am also tossing around the idea of characters with fewer than four limbs, but that seems like a rare use case that can be addressed later. So for now, symmetry is automatic, not optional.

Essential Assumptions (based on our definition of an "animateable figure": bilateral, bipedal, four-limbed):

1. Following the "line of movement" drawing principle, all characters must have spines. This defines the bilateral quality of our figure. However, thanks to our color-type system, artists can start with either the head or the spine, and limbs can be added at any point after the spine.

2. A character's legs will be closer to the root of its spine. Its arms will be closer to the head. Therefore, the upper set of limbs will be considered the arms. We are not considering creatures with tails, so we assume that the spine line will always end close to where the leg lines begin.

3. The artist will draw the character in a reference pose. While we use simple angle math to determine some rotations, we do not take into account severe limb bending or limb crossing. As we set out to make animateable figures, any posing should be added later, using the character's skeleton.
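Assumption 2 can be sketched as a nearest-end test. This is only an illustration under stated assumptions, not the tool's actual code: `classify_limbs`, `head_end`, and `root_end` are hypothetical names, each limb is represented by the point where its stroke starts, and "closer" is taken to mean plain Euclidean distance in sketch coordinates.

```python
import math


def classify_limbs(limb_starts, head_end, root_end):
    """Label each limb 'arm' or 'leg' by the spine end its start point is nearer to.

    limb_starts: list of (x, y) points where each limb stroke begins (assumed
                 to be the limb-trunk connection).
    head_end, root_end: (x, y) endpoints of the spine stroke (hypothetical inputs).
    """
    labels = []
    for start in limb_starts:
        d_head = math.dist(start, head_end)
        d_root = math.dist(start, root_end)
        # Limbs nearer the head are treated as arms, the rest as legs.
        labels.append("arm" if d_head < d_root else "leg")
    return labels


if __name__ == "__main__":
    starts = [(-1, 8), (1, 8), (-1, 0), (1, 0)]
    print(classify_limbs(starts, head_end=(0, 10), root_end=(0, 0)))
```

A tailed creature would break this test, which is why the assumption excludes tails: the spine root would no longer sit near the leg connections.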

Potentially Detrimental Presumptions: (which are in place for now but should be worked out later for an actually useful product)

1. Artists never erase. The program assumes that the first line drawn for a body part is an accurate representation. This is the one presumption most detrimental to usability. Actually, it's so bad it might make the tool useless.

2. Artists always draw limbs radiating from the body. That is, the pen is put down where the limb connects to the trunk and lifted at the extremity. This may not necessarily be true, especially if the limbs are bent or lifted.

3. Artists always draw flat bones starting from the skull and moving downwards, continuing with the ribcage and pelvis. This is not always the case, but most figure drawing classes teach it this way, so I think this is the least worrisome.

4. The spine is straight. Curving spines would be nice and add a lot of character, but we are also working with a 2D projection, and more personality comes from the lateral view. At the moment, we need a straight axis spine because of our calculation methods.

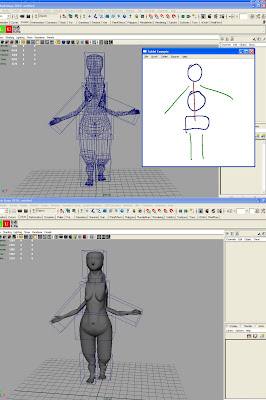

Given the aforementioned assumptions and presumptions, each stroke is saved appropriately, into its correct location. Hooray.

The next step is to analyze these strokes to acquire measurements. Salient measurements are chosen based on those listed in the paper by Mustafa Kasap and Nadia Magnenat-Thalmann, "Modeling individual animated virtual humans for crowds", and in Eliot Goldfinger's "Human Anatomy for Artists: The Elements of Form." I want to combine a graphics programming source with an artistic one, because if there's anything either side lacks, it's the opinion of the other.

The measurements are then put into the FigureDataNode, which is used to translate, scale, and rotate boxes. Later, we will load a figure, but for now, we are using boxes as substitutes for body parts. Also, because I am on the clock for tonight, I am going to ignore joints and consider each limb as one block. Therefore, the FigureDataNode should contain the following information as plugs.

For each flat shape, we have a box, whose transformations are defined as follows:

FlatBoneScaleX - flat bone width, difference between the least and greatest X values from sketch

FlatBoneScaleY - flat bone height, difference between the least and greatest Y values from sketch

FlatBoneScaleZ - flat bone depth, maintain ratio with FlatBoneScaleX and FlatBoneScaleY.

FlatBoneRotateX - 0.0, none.

FlatBoneRotateY - 0.0, none.

FlatBoneRotateZ - 0.0, none. (could we derive this later?)

FlatBoneTranslateX - X center of flat bone, midpoint of the least and greatest X values from sketch.

FlatBoneTranslateY - Y center of flat bone, midpoint of the least and greatest Y values from sketch.

FlatBoneTranslateZ - 0.0, none

At the moment, we are approximating flat bones with axis-aligned bounding boxes. Also, because we assume the spine is straight, we don't have to derive certain parameters such as RotateZ.
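The flat-bone plugs above amount to an axis-aligned bounding box computation. The following is a minimal sketch, not the actual FigureDataNode code: `flat_bone_transform`, the `points` list of (x, y) stroke samples, and `depth_ratio` are assumptions for illustration. In particular, depth is taken as a fixed fraction of the width, since the text only says that depth keeps a ratio with the other two scales.

```python
def flat_bone_transform(points, depth_ratio=0.5):
    """Approximate a flat bone stroke with an axis-aligned bounding box.

    points: list of (x, y) samples from the sketched stroke.
    depth_ratio: hypothetical depth-to-width ratio used for ScaleZ.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, max_x = min(xs), max(xs)
    min_y, max_y = min(ys), max(ys)

    scale_x = max_x - min_x          # FlatBoneScaleX: width
    scale_y = max_y - min_y          # FlatBoneScaleY: height
    scale_z = depth_ratio * scale_x  # FlatBoneScaleZ: assumed ratio of width

    return {
        "scale": (scale_x, scale_y, scale_z),
        # Straight spine assumption: no rotation is derived for flat bones.
        "rotate": (0.0, 0.0, 0.0),
        # Box center in X and Y; the sketch is 2D, so Z stays at 0.
        "translate": ((min_x + max_x) / 2.0, (min_y + max_y) / 2.0, 0.0),
    }


if __name__ == "__main__":
    ribcage = [(1, 2), (5, 2), (5, 8), (1, 8)]
    print(flat_bone_transform(ribcage))
```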

However, we have to take more care with long bones. For all these, we have moved the pivot point to one end of the bone:

LongBoneScaleX - 1.0, default.

LongBoneScaleY - long bone length, distance between curve endpoints from sketch

LongBoneScaleZ - 1.0, default.

LongBoneRotateX - 0.0, none.

LongBoneRotateY - 0.0, none.

LongBoneRotateZ - take the vector between the curve endpoints, find the angle between that vector and the spine axis.

LongBoneTranslateX - beginning curve endpoint X, from sketch.

LongBoneTranslateY - beginning curve endpoint Y, from sketch.

LongBoneTranslateZ - 0.0, none.
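The long-bone plugs can be sketched the same way. This is an illustration under several assumptions that the text does not state: the sketch plane is y-up, the spine axis points from head to root (default `(0, -1)`), the rotation is a signed angle in degrees measured counterclockwise from the spine axis, and the stroke's first sample is the limb-trunk connection (presumption 2). `long_bone_transform` is a hypothetical name.

```python
import math


def long_bone_transform(stroke, spine_axis=(0.0, -1.0)):
    """Derive long-bone plug values from a stroke's two endpoints.

    stroke: list of (x, y) samples; the first is assumed to be the trunk end.
    spine_axis: assumed direction of the straight spine, head to root.
    """
    (x0, y0), (x1, y1) = stroke[0], stroke[-1]
    vx, vy = x1 - x0, y1 - y0

    # LongBoneScaleY: distance between the curve endpoints.
    length = math.hypot(vx, vy)

    # LongBoneRotateZ: signed angle from the spine axis to the limb vector.
    ax, ay = spine_axis
    cross = ax * vy - ay * vx
    dot = ax * vx + ay * vy
    rotate_z = math.degrees(math.atan2(cross, dot))

    return {
        "scale": (1.0, length, 1.0),
        "rotate": (0.0, 0.0, rotate_z),
        # The pivot sits at the trunk end of the bone.
        "translate": (x0, y0, 0.0),
    }


if __name__ == "__main__":
    arm = [(2, 8), (3.5, 8.2), (5, 8)]
    print(long_bone_transform(arm))
```

Only the endpoints are used, so a bent limb is flattened to a straight segment, which matches the decision to treat each limb as one block.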

WaistGirth - .7*(PelvisScaleX) gives the ideal waist-to-hip ratio, a good starting point. This can be adjusted later with curves.

ScyeLength - distance between arm-trunk connection and base of skull.

ShoulderLength - distance between arm-trunk connections.

Overall height and width can be calculated by adding the heights and widths of the body parts above. More accurate measurements will come later and will allow greater variation. For example, we can scale the top four vertices on the ribcage box to approximate the underbust. Shoulder width is the distance between the arm-trunk connections, and scye length is the distance between an arm-trunk connection and the base of the skull. Waist girth is trickier. For now, we scale PelvisScaleX by a fixed waist-to-hip ratio: .7 as listed above, or .9 for an ideal masculine ratio. The waist is mostly fat and soft tissue, so it should be adjusted during the StyleCurve stage.
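The three derived measurements reduce to two distances and one multiplication. A minimal sketch, assuming hypothetical inputs: the two arm-trunk connection points, the base of the skull, and PelvisScaleX. The function name `derived_measurements` is invented here, the waist-to-hip ratio is left as a parameter (defaulting to the .7 used for the WaistGirth plug), and the scye length is averaged over both arms, which is a choice the text does not specify.

```python
import math


def derived_measurements(left_arm_root, right_arm_root, skull_base,
                         pelvis_scale_x, waist_to_hip=0.7):
    """Compute ShoulderLength, ScyeLength, and WaistGirth from sketch data."""
    # ShoulderLength: distance between the two arm-trunk connections.
    shoulder_length = math.dist(left_arm_root, right_arm_root)

    # ScyeLength: arm-trunk connection to base of skull, averaged over both
    # sides (an assumption; with enforced symmetry the two should match).
    scye_length = (math.dist(left_arm_root, skull_base)
                   + math.dist(right_arm_root, skull_base)) / 2.0

    # WaistGirth: fixed ratio of the pelvis width, to be restyled later.
    waist_girth = waist_to_hip * pelvis_scale_x

    return {
        "ShoulderLength": shoulder_length,
        "ScyeLength": scye_length,
        "WaistGirth": waist_girth,
    }


if __name__ == "__main__":
    print(derived_measurements((-2, 8), (2, 8), (0, 10), pelvis_scale_x=5.0))
```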

In the far future, we can make a skeleton by taking the rotation information and then calculating bone lengths based on the translation and scale.
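As a rough idea of what that could look like, the sketch below recovers a bone's two joint positions from its translate, rotate, and scale values. It is speculative and rests on assumptions not stated in the text: the bone's pivot is its trunk end, RotateZ is in degrees counterclockwise from a spine axis pointing head to root, and ScaleY is the bone length. `bone_endpoints` is a hypothetical helper.

```python
import math


def bone_endpoints(translate_xy, rotate_z_deg, length, spine_axis=(0.0, -1.0)):
    """Return (start, end) joint positions for one long bone."""
    # Normalize the assumed spine axis so that length is preserved.
    ax, ay = spine_axis
    norm = math.hypot(ax, ay)
    ax, ay = ax / norm, ay / norm

    # Rotate the spine axis counterclockwise by RotateZ to get the bone direction.
    t = math.radians(rotate_z_deg)
    dx = ax * math.cos(t) - ay * math.sin(t)
    dy = ax * math.sin(t) + ay * math.cos(t)

    x0, y0 = translate_xy
    return (x0, y0), (x0 + length * dx, y0 + length * dy)


if __name__ == "__main__":
    start, end = bone_endpoints((2.0, 8.0), 90.0, 3.0)
    print(start, end)
```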