I've been doing more research for another class I'm in, and it's turned up more interesting papers. These - especially those on modeling humans - have been much more relevant.

I'm not listing the papers I mentioned in the other post, but I'm still sorting the papers by topic.

---

Modeling Humans---

(From Range Scans)

Exploring the space of human body shapes: data-driven synthesis under anthropometric control

Brett Allen, Brian Curless, Zoran Popovic

University of Washington

(From Range Scans)

Allen, B., Curless, B., and Popovic, Z. 2003.

The space of human body shapes: reconstruction and parameterization from range scans.

ACM Trans. Graph. 22, 3 (Jul. 2003), 587-594. DOI= http://doi.acm.org/10.1145/882262.882311

(Volumetric Techniques)

Analysis of Human Shape Variation Using Volumetric Techniques

ZB Azouz, M Rioux, C Shu, R Lepage

(Head Modeling with Landmarks)

Head shop: Generating animated head models with anatomical structure

K Kahler, J Haber, H Yamauchi, HP Seidel - 2002

(From 3D Scan Data with Templates)

Animatable Human Body Model Reconstruction from 3D Scan Data using Templates

(From sizing parameters)

Seo, H. and Magnenat-Thalmann, N. 2003.

An automatic modeling of human bodies from sizing parameters. In Proceedings of the 2003 Symposium on Interactive 3D Graphics (Monterey, California, April 27 - 30, 2003).

I3D '03. ACM, New York, NY, 19-26. DOI= http://doi.acm.org/10.1145/641480.641487

(Sweep-based)

Dae-Eun Hyun, Seung-Hyun Yoon, Jung-Woo Chang, Joon-Kyung Seong, Myung-Soo Kim and Bert Jüttler

Sweep-based human deformation

(Anatomy-based)

Scheepers, F., Parent, R. E., Carlson, W. E., and May, S. F. 1997. Anatomy-based modeling of the human musculature. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques. ACM Press/Addison-Wesley Publishing Co., New York, NY, 163-172.

(Morphable Faces)

Blanz, V. and Vetter, T. 1999.

A morphable model for the synthesis of 3D faces.

In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques. ACM Press/Addison-Wesley Publishing Co., New York, NY, 187-194.

---

Differentiating Crowds---

(Saliency-based Variation)

Eye-catching Crowds: Saliency based Selective Variation

Rachel McDonnell, Micheál Larkin, Benjamín Hernández, Isaac Rudomin, Carol O'Sullivan

---

Animating Figures in Crowds---

(Perception of Motion)

Perception of Human Motion with Different Geometric Models

Jessica K. Hodgins, James F. O'Brien, Jack Tumblin

(Sex Perception in Motion)

McDonnell, R., Jörg, S., Hodgins, J. K., Newell, F., and O'Sullivan, C. 2007.

Virtual shapers & movers: form and motion affect sex perception.

In Proceedings of the 4th Symposium on Applied Perception in Graphics and Visualization (Tubingen, Germany, July 25 - 27, 2007). APGV '07, vol. 253. ACM, New York, NY, 7-10. DOI= http://doi.acm.org/10.1145/1272582.1272584

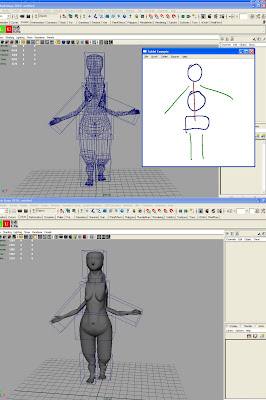

(Automatic Rigging)

Automatic Rigging and Animation of 3D Characters

Ilya Baran, Jovan Popovic

(Physics-Based Walking)

SIMBICON: Simple Biped Locomotion Control

Kangkang Yin, Kevin Loken, Michiel van de Panne

(Skin and Muscle Deformation)

Data-driven Modeling of Skin and Muscle Deformation

Sang Il Park, Jessica Hodgins

(Joint-Aware Deformation)

Joint-aware Manipulation of Deformable Models

Weiwei Xu, Jun Wang, KangKang Yin, Kun Zhou, Michiel van de Panne, Falai Chen, Baining Guo

---

Rendering Crowds---

(LOD Comparative Study)

LOD Human Representations: A Comparative Study

Rachel McDonnell, Simon Dobbyn, Carol O’Sullivan

(Vein Textures)

Runions, A., Fuhrer, M., Lane, B., Federl, P., Rolland-Lagan, A., and Prusinkiewicz, P. 2005. Modeling and visualization of leaf venation patterns. ACM Trans. Graph. 24, 3 (Jul. 2005), 702-711.

(Skin Reflectance)

Analysis of Human Faces using a Measurement-Based Skin Reflectance Model

Tim Weyrich, Wojciech Matusik, Hanspeter Pfister, Bernd Bickel, Craig Donner, Chien Tu, Janet McAndless, Jinho Lee, Addy Ngan, Henrik Wann Jensen, Markus Gross

(Subsurface Scattering)

An Empirical BSSRDF Model

Craig Donner, Jason Lawrence, Ravi Ramamoorthi, Toshiya Hachisuka, Henrik Wann Jensen, Shree Nayar

(Rendering)

Drawing a Crowd

David R. Gosselin, Pedro V. Sander, and Jason L. Mitchell

(Face Cloning)

Hyneman, W., Itokazu, H., Williams, L., and Zhao, X. 2005. Human face project. In ACM SIGGRAPH 2005 Courses (Los Angeles, California, July 31 - August 04, 2005). J. Fujii, Ed. SIGGRAPH '05. ACM, New York, NY, 5.

(Skin Texture)

Multispectral Skin Color Modeling

Elli Angelopoulou, Rana Molana, Kostas Daniilidis

(Skin Texture)

Skin Texture Modeling

Oana G. Cula and Kristin J. Dana

(Skin Texture)

The secret of velvety skin

Jan Koenderink, Sylvia Pont

(Skin Texture)

Krishnaswamy, A., and Baranoski, G. 2004. A biophysically-based spectral model of light interaction with human skin. Computer Graphics Forum 23, 3 (Sept.), 331–340.

(Face Cloning)

Realistic Human Face Rendering for “The Matrix Reloaded”

George Borshukov and J. P. Lewis

---

Props and Clothing---

(Clothes)

Frederic Cordier, Hyewon Seo, Nadia Magnenat-Thalmann,

"Made-to-Measure Technologies for an Online Clothing Store,"

IEEE Computer Graphics and Applications, vol. 23, no. 1, pp. 38-48, Jan./Feb. 2003, doi:10.1109/MCG.2003.1159612

(Clothes)

Clothing the Masses: Real-Time Clothed Crowds With Variation

S. Dobbyn, R. McDonnell, L. Kavan, S. Collins and C. O’Sullivan

Interaction, Simulation and Graphics Lab, Trinity College Dublin, Ireland

(LOD Evaluation for Clothes Perception)

McDonnell, R., Dobbyn, S., Collins, S., and O'Sullivan, C. 2006.

Perceptual evaluation of LOD clothing for virtual humans.

In Proceedings of the 2006 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (Vienna, Austria, September 02 - 04, 2006).

Symposium on Computer Animation. Eurographics Association, Aire-la-Ville, Switzerland, 117-126.